Lecture notes from biophysics professor Sarah Marzen

How to build a model that respects physical and biophysical assumptions when you have no idea what is going on….

A workshop series on machine learning using tools in python is starting this Thursday (Oct 20th) at HMC. If you are interested, please find more information below and register using this link.

What: A Hands-on Workshop series in Machine Learning

Who: All 7C students

Instructor: Dr. Aashita Kesarwani

When: 3-5 pm PST on Tuesdays and Thursdays from Oct 20th, 2022 to Nov 10th, 2022 (7 sessions in total)

Where: Shanahan 3485 (Grace Hopper Conference room) or remotely via Zoom (link will be shared when you register)

The workshop series is designed with a focus on the practical aspects of machine learning using real-world datasets and the tools in the Python ecosystem, and is targeted towards complete beginners who are familiar with Python.

You will learn the minimal but most useful tools for exploring datasets using pandas and then be gently introduced to neural networks. You will also learn about architectures such as Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and transformer-based models, and apply them to real-world textual and image datasets.

Please register using this Google form to save your seat. It is highly recommended to attend the workshop in person as you will be coding in groups and participating in discussions, but there is an option to join remotely via Zoom. The Zoom link and the recordings for each session will be shared with the registered participants. Please have a look here for more information, including the topics to be covered. You are free to attend some of the sessions while skipping others if you are already familiar with certain topics.

Sarah Marzen, Ph.D.

W. M. Keck Science Department

Biophysics is “searching for principles”, and boy is it data-heavy

There has been a plethora of data-gathering advances in biophysics that allow us to probe life at the level of single molecules, single cells, whole organisms, or even populations. We can specify a protein’s position as a function of time from super-resolution microscopy readouts. We can specify the number of microbes of each type as a function of time in a microbial population. We can specify, with the advent of artificial intelligence, the exact positioning and behavior of a worm or a fly as a function of time. We can even specify brain activity as a function of time to varying degrees of spatial and temporal precision. Some of the best data sets in the world are available online for anyone to analyze.

What do we do now? How do we make sense of all this data?

If we could only make sense of all this data, we would have a Holy Grail of biophysics: a quantitative theory of life.

When wrestling with the data, it immediately becomes obvious that one can build highly predictive models that impart almost no understanding. When I was a postdoc at MIT, I gratefully worked for Nikta Fakhri on a project that involved fluorescent readouts of a particular protein in starfish eggs. The protein made beautiful spiral patterns as it moved through the cell, and we wanted at first to just predict the next video frames from the past video frames. We were hopeful and somewhat convinced that a predictive model would lead to new explanations of what was going on in the cell.

Boy, were we wrong.

We could easily build wonderful predictive models. A combination of PCA and ARIMA models did quite well. A combination of PCA and reservoir computing did even better. Then, a CNN and LSTM beat even that, eking out ever smaller gains in predictive accuracy.
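The PCA-plus-time-series approach can be sketched roughly as follows. This is not the code from the starfish project; it is a minimal illustration on synthetic data, and it swaps in a plain autoregressive (AR) model with an intercept, fit by least squares, for a full ARIMA model. The function name `pca_ar_forecast` and its parameters are hypothetical. PCA compresses each frame to a few coordinates, and the AR model predicts the next coordinates from the previous ones.

```python
import numpy as np


def pca_ar_forecast(frames, n_components=5, order=2):
    """One-step-ahead forecast of the next video frame via PCA + linear AR.

    frames: array of shape (T, H, W), oldest frame first.
    Assumption: an AR(order) model with an intercept, fitted by least
    squares, stands in for a full ARIMA model (no differencing, no MA terms).
    """
    T = frames.shape[0]
    X = frames.reshape(T, -1)            # flatten each frame into a row vector
    mean = X.mean(axis=0)
    Xc = X - mean

    # PCA via SVD: the rows of Vt are the principal directions.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:n_components]                # (k, H*W)
    Z = Xc @ W.T                         # (T, k) low-dimensional trajectory

    # Lagged design matrix: predict Z[t] from Z[t-1], ..., Z[t-order] and a constant.
    lagged = np.array([
        np.concatenate([Z[t - lag] for lag in range(1, order + 1)])
        for t in range(order, T)
    ])
    A = np.hstack([lagged, np.ones((T - order, 1))])
    coef, *_ = np.linalg.lstsq(A, Z[order:], rcond=None)

    # Apply the fitted AR model to the most recent `order` states, then map back to pixels.
    recent = np.concatenate([Z[T - lag] for lag in range(1, order + 1)] + [[1.0]])
    z_next = recent @ coef
    return (z_next @ W + mean).reshape(frames.shape[1:])


# Synthetic check: a wave travelling across a 16 x 32 frame.
x = np.linspace(0, 2 * np.pi, 32, endpoint=False)
frames = np.array([np.tile(np.sin(x - 0.3 * t), (16, 1)) for t in range(60)])
pred = pca_ar_forecast(frames[:-1], n_components=2, order=1)
print(np.abs(pred - frames[-1]).max())  # near zero: this wave is exactly linear in PCA space
```

The forecast can be accurate while the fitted `coef` matrix says little about mechanism, which is the point the next paragraphs make.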

But what had we learned?

In physics, we often follow an 80/20 rule: we want a rule that nails 80% of the accuracy with 20% of the effort. When Copernicus proposed that the Earth revolved around the Sun, his theory did not produce better predictions than the theories with epicycles of epicycles and the Sun revolving around the Earth. However, the epicycles of epicycles were so much more complicated that the simpler rule, which basically nailed the predictions, was preferable. (Actually, Copernicus thought that the Earth’s orbit was circular, and so he had to add an epicycle anyway. But still.)

So, too, with starfish oocytes. Tzer-Han, then a graduate student in the lab, added expert knowledge into the model of proteins swirling around on the surface of the starfish oocyte. He hypothesized that these protein concentrations obeyed a reaction-diffusion equation, and all we had to do was fit the reaction term. This worked: not quite as well as the earlier, more complicated models in terms of predictive accuracy, but with some interpretability.
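The idea of fitting only the reaction term in ∂u/∂t = D ∇²u + f(u) can be sketched in one spatial dimension. This is a hypothetical illustration, not the model from the project: it assumes a single species on a periodic 1-D grid, a known diffusion constant D, a reaction term f(u) written as a cubic polynomial in u, and simple finite-difference derivatives. The helper names `laplacian_1d` and `fit_reaction_term` are made up for this example. Because diffusion is fixed by physics and only f is learned, the fitted coefficients can be read directly as a reaction law.

```python
import numpy as np


def laplacian_1d(u, dx):
    """Second spatial derivative on a periodic 1-D grid (central differences)."""
    return (np.roll(u, 1, axis=-1) - 2 * u + np.roll(u, -1, axis=-1)) / dx**2


def fit_reaction_term(u, dt, dx, D, degree=3):
    """Fit f in u_t = D * u_xx + f(u), assuming f(u) = sum_k theta_k * u**k.

    u: array of shape (T, N), concentration snapshots on a periodic 1-D grid.
    Assumptions: D is known; derivatives come from finite differences.
    Returns theta, of length degree + 1 (constant term first).
    """
    u_t = (u[1:] - u[:-1]) / dt                      # forward difference in time
    residual = u_t - D * laplacian_1d(u[:-1], dx)    # the part diffusion cannot explain
    basis = np.stack([u[:-1].ravel() ** k for k in range(degree + 1)], axis=1)
    theta, *_ = np.linalg.lstsq(basis, residual.ravel(), rcond=None)
    return theta


# Synthetic check: simulate u_t = D u_xx + u - u**3 with forward Euler, then recover f.
rng = np.random.default_rng(0)
N, dx, dt, D = 64, 0.5, 0.01, 1.0
u = [0.1 * rng.standard_normal(N)]
for _ in range(300):
    u.append(u[-1] + dt * (D * laplacian_1d(u[-1], dx) + u[-1] - u[-1] ** 3))
u = np.array(u)
theta = fit_reaction_term(u, dt, dx, D)
print(np.round(theta, 3))  # close to [0, 1, 0, -1], i.e. f(u) = u - u**3
```

On real microscopy readouts the finite-difference derivatives would be noisy, so some smoothing would likely be needed; the sketch only shows how a physical constraint turns a black-box fit into a small, interpretable regression.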

The lesson from this? There is a surplus of data, but what is needed is more than just model.fit and model.predict. To reach the Holy Grail of biophysics, and of many other fields, data science needs models with explanatory power.

The Claremont Colleges Library is pleased to announce the establishment of the Claremont Colleges Data Science Hub this fall.

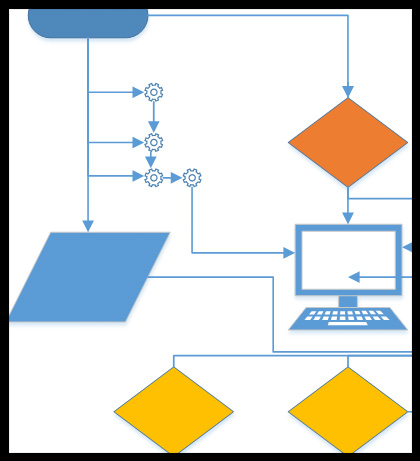

The DS Hub is intended to support the collective interests and aims of the seven Claremont Colleges in their efforts to develop research and teaching in rapidly growing data science fields. Following a 3-year evaluation and study process directed by the Office for Consortial Academic Collaboration (OCAC), the Library was proposed as a communal site to host essential support activities such as workshops, consultations, faculty presentations, and a computing lab open to the 7Cs community.

The Library will strive to develop this hub as an inclusive and collaborative support system for the teaching and learning of data science tools and approaches across disciplines and skill levels. The DS Hub is currently under the guidance of Data Science and Digital Scholarship Coordinator Jeanine Finn and will be administered as part of the Digital Strategies and Scholarship Division.

Please look out for more information as we develop our physical space and program plans during the 2021-2022 academic year.

ITHAKA and JSTOR Labs are inviting scholars to explore their new text and data mining platform Constellate.

Constellate is a text analytics platform aimed at teaching and enabling new and established researchers to text mine. Two of ITHAKA’s services, JSTOR and Portico, were the initial sources of content for the new platform, which now also includes Chronicling America, collections from Documenting the American South, the South Asia Open Archives, and Independent Voices from Reveal Digital.

The platform incorporates Jupyter Notebooks for tutorials and open-source analytical tools and hosts a lively community of practice for scholars wanting to develop their TDM skills with Python.

Who: All 7C students & faculty

When: 5:45 to 7:45 pm on 7 consecutive Wednesdays from Oct 2nd to Nov 13th

Where: Aviation Room, Hoch-Shanahan Dining Commons, HMC

Why: To learn machine learning techniques and related tools in Python!

More information:

The workshop series is designed with a focus on the practical aspects of machine learning using real-world datasets and the tools in the Python ecosystem. It is targeted towards complete beginners familiar with Python but is also designed adaptively so that you will be challenged even if you have some familiarity with machine learning tools.

You will quickly learn the minimal but most useful tools for exploring datasets using pandas and then move on to conventional machine learning algorithms and other related concepts that come in handy for all models, including neural networks. Neural networks will be introduced gently from the fourth session onwards, and you will learn some more involved architectures such as Convolutional Neural Networks (CNN) and apply them to real-world datasets. The sessions will be a good mix of theory, explained intuitively and simply, and hands-on exercises.

If you are interested, please have a look here for more information on the series, including the topics to be covered. Seats are limited, so please register using this link. The only prerequisites are Python programming and the basics of probability and statistics. It is important that you attend all the sessions of the series for it to be useful.

Pomona mathematics professor Jo Hardin has spent the summer collaborating with data science colleagues Hunter Glanz (Cal Poly, San Luis Obispo) and Nick Horton (Amherst) on an ambitious post-a-day project building the Teach Data Science blog.

The blog is filled with useful resources and reflections on teaching data science, designed to ease the learning curve for faculty engaging in this interdisciplinary space.

Jo’s recent post on Data Science for Good compiles some excellent resources for educators trying to incorporate a more critical approach to data science topics, with links to a number of groups and projects looking at the relationship between big data projects and social justice outcomes.

Update: The conference was a great success with a large and enthusiastic turnout!

Presenter slides and other information will be made available as we receive it.

Thanks to all the presenters and attendees for a lively conversation and great collaborative spirit!

You are warmly invited to join us for a Data Science Social Hour, Tuesday, May 14, 4:00-5:00 PM, in the Founders Room of The Claremont Colleges Library, with hors d’oeuvres and libations!

As you may know, a group of faculty and staff from across the colleges have been working together through the Office of Consortial Academic Collaboration to explore developing a Claremont Data Science curriculum and planning faculty development opportunities. We would like to share some of our accomplishments over the past year and hear from you about what you’d like to see at the 7Cs. We also hope to connect shared data science research and teaching interests across the colleges.

Please let us know you’re coming with an RSVP.

The Office of Consortial Academic Collaboration has launched a survey to support development of 7C systems, programs and resources that will directly address identified faculty interests and needs pertaining to data science.

The 7C data science survey will only take a few minutes of your time. Thank you!